“The worth of a state in the long run is the worth of the individuals composing it; and a state which postpones the interests of truth to the interests of quiet is a despotism, no matter what its form may be.”

— John Stuart Mill, On Liberty (1859)

The Architecture of Control

The Disinformation Industry was once mobilised to defend democracy from foreign interference. Now, it governs speech at home. Fact-checkers act as gatekeepers, platforms enforce ideological alignment, and NGOs operationalise censorship through “civic” partnerships.

Institutions evolved tactical defence into strategic narrative control, shaping not just responses to falsehoods but the boundaries of permissible truth.

This is not a glitch in liberal democracy. It is its epistemic infrastructure.

Strategic Inception: Naming the Threat

Every regime controls reality by controlling the vocabulary of threat.

“The definition of ‘disinformation’ often lacks clarity and varies by context, which reflects national security priorities and legal systems.” — Lim, Disinformation as a Global Problem (2020) [5]

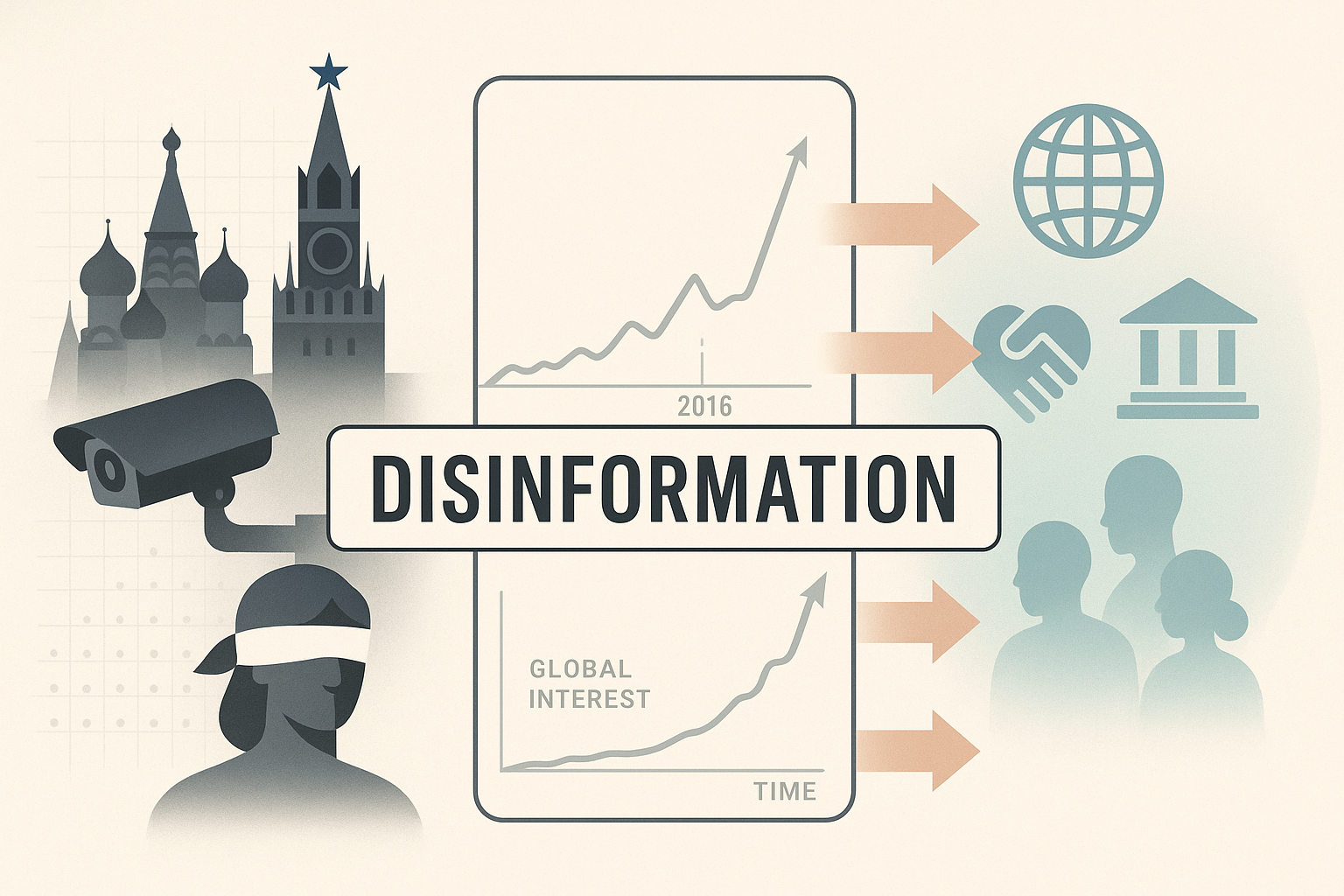

“Disinformation” was once a tactical term—used to describe deliberate, state-sponsored falsehoods targeting adversarial populations. Since 2016, however, the term has broadened, lost its precision, and gained institutional utility. RAND’s 2021 study concedes that Western governments often lack clear definitions that distinguish hostile influence from protected political expression [1]. Within this ambiguity lies power.

As Lim [5] explains, each regime tailors its disinformation framework to meet internal legitimacy needs. In the West, this fluidity has enabled a self-reinforcing architecture: threat inflation justifies policy expansion; platform compliance obscures enforcement; dissent is reclassified as contamination.

This transformation is no accident. It represents the institutionalisation of epistemic control—driven less by truth defence than by the preservation of interpretive sovereignty.

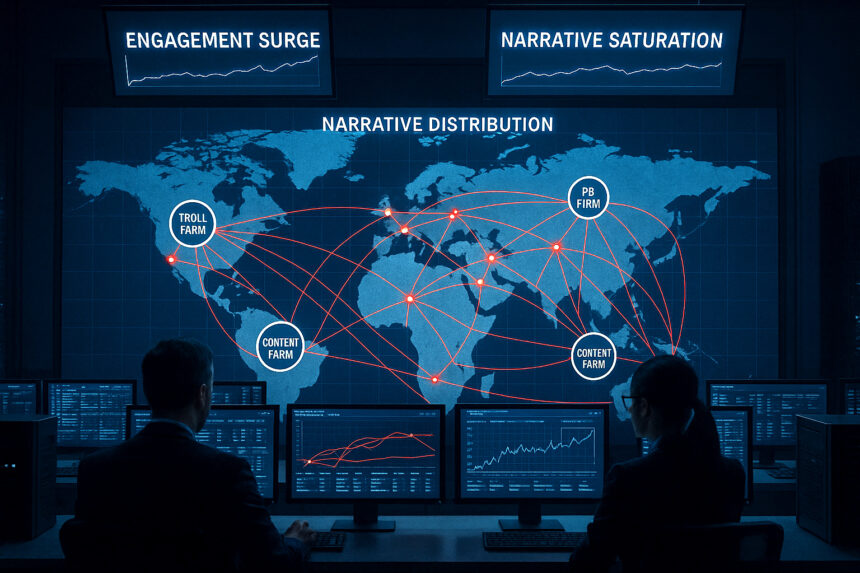

The Sovereign Disinformation Loop: A Five-Stage Framework

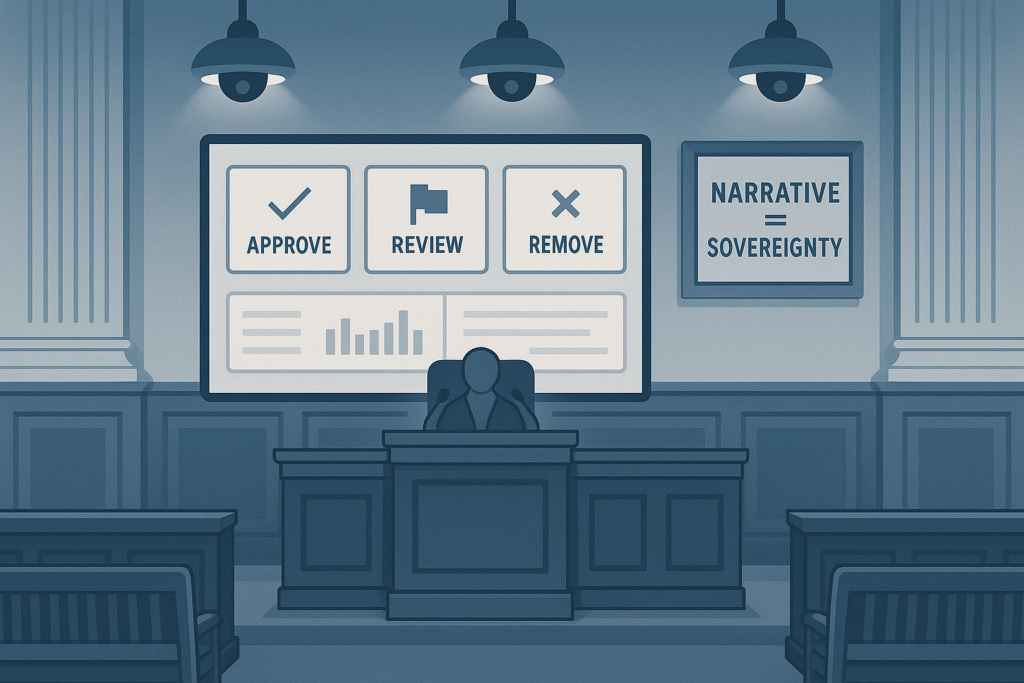

Governments do not merely counter disinformation—they manufacture the need for its control.

“The conscious and intelligent manipulation of the organized habits and opinions of the masses is an important element in democratic society.” — Edward Bernays, Propaganda (1928) [6]

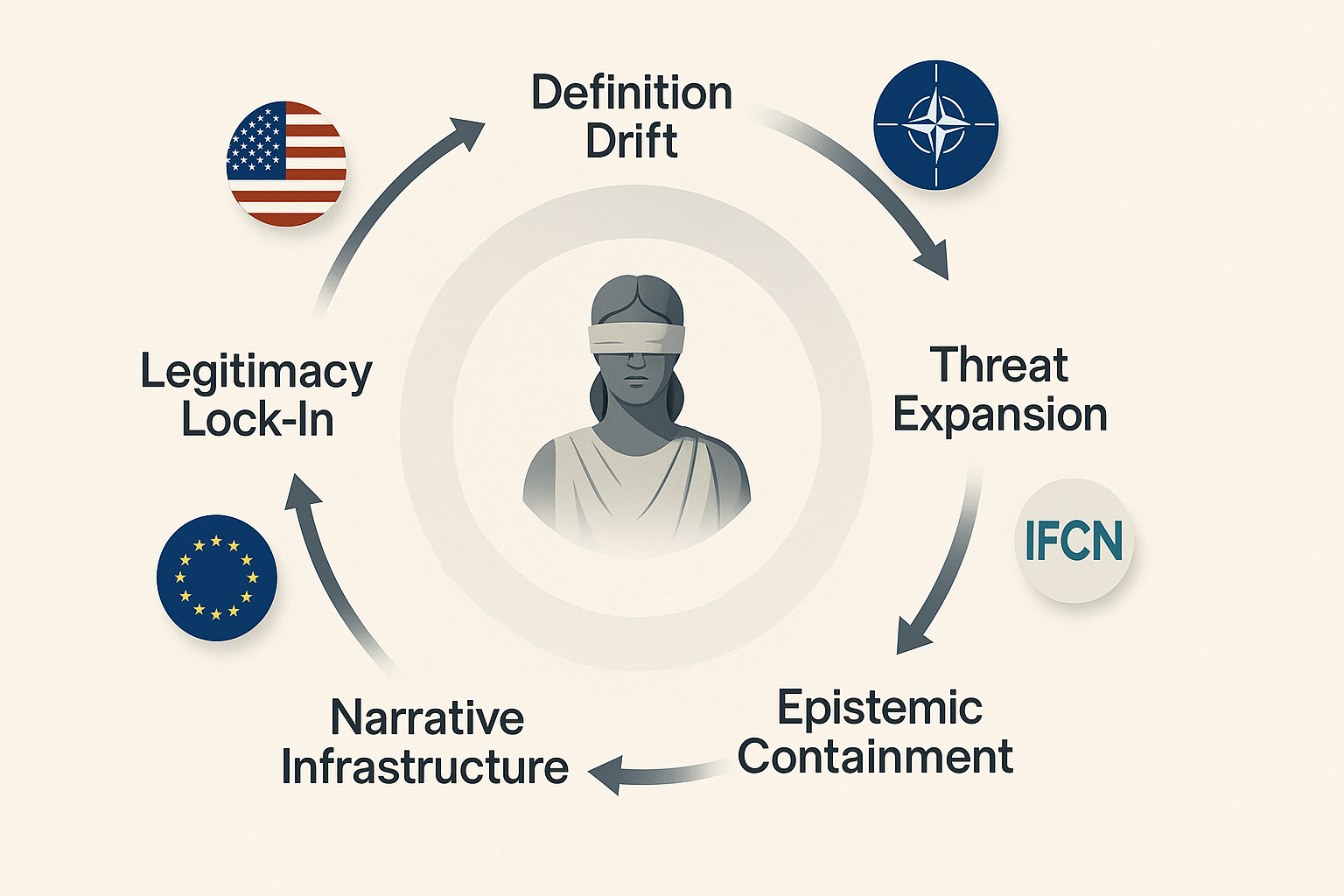

A strategic loop captures this evolution by mapping how disinformation governance mutates from tactical defence into systemic authority:

- Definition Drift – Ambiguity around “disinformation” enables expansion into misinformation, malinformation, and dissent [5].

- Threat Expansion – Strategic communication actors align disinformation with national security, blurring domestic and foreign spheres [1].

- Narrative Infrastructure – Fact-checking networks, literacy consortia, and NGO partners establish interpretive authority [2].

- Epistemic Containment – Platforms and classifiers enforce narrative limits invisibly through algorithms and content moderation [3].

- Legitimacy Lock-In – The disinformation threat becomes permanent, and so does the system that defines and polices it.

Western systems implement this loop through the aesthetics of civil society, mirroring China’s model of “cyber sovereignty” and Russia’s doctrine of “reflexive control.”

Institutional Capture of Verification Systems

Control over what counts as truth is more powerful than control over what is false.

“Mass media function to mobilize support for the special interests that dominate the state and private activity.” — Herman & Chomsky, Manufacturing Consent (1988) [9]

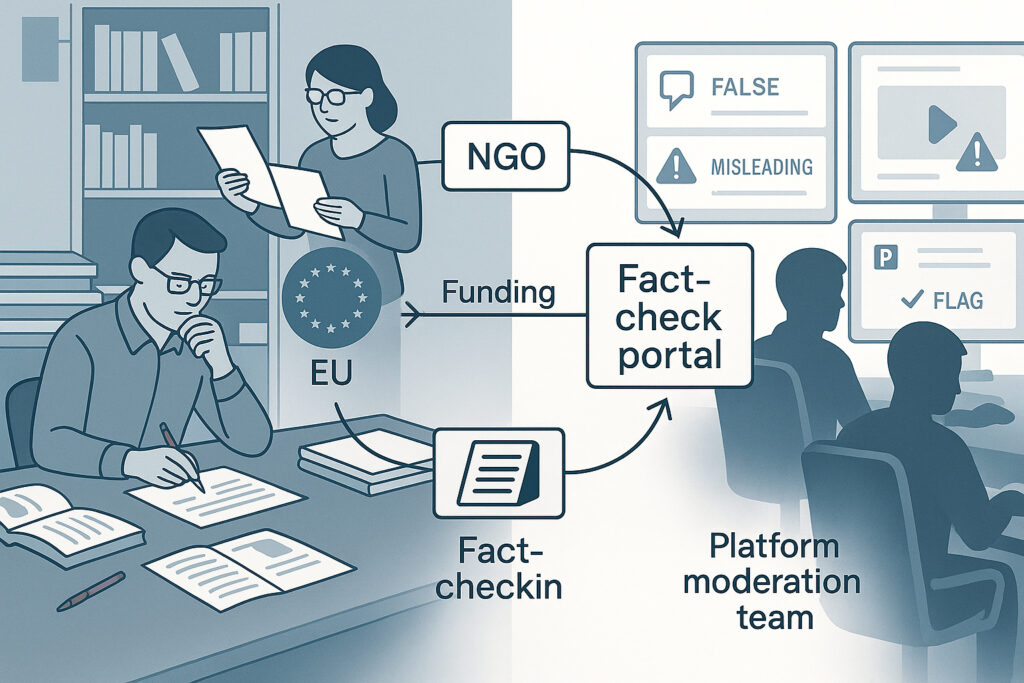

The elevation of fact-checking into a strategic apparatus has transformed its purpose. Pamment’s comparative study shows that fact-checking—once journalistic and adversarial—now functions as a soft governance tool [2]. Institutions no longer contest competing accounts—they construct and credential the baseline.

Willemo (2019) [12] and Marcellino et al. (2020) [3] show how these systems now operate within platform pipelines. Algorithms detect not falsehood but deviance. Dissent, stripped of nuance, becomes a flag.

Western verification culture mirrors Russian reflexive control by actively shaping the target audience's perception of what is true, permissible, and visible, thereby inducing specific behaviours. Unlike the Russian model, Western systems outsource this control to NGOs and present it under the guise of neutrality.

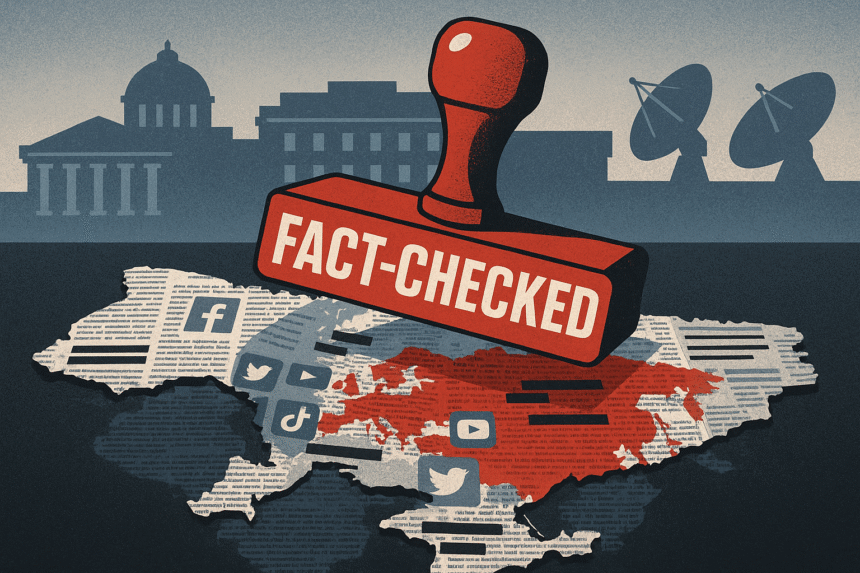

Disinformation as Strategic Rhetoric

To label something as disinformation is to pre-emptively disqualify it from participation in the public sphere.

“The individual who searches for truth must stand outside the mass. But in modern democracies, truth must be managed, for it cannot be relied upon to emerge by itself.” — Walter Lippmann, Public Opinion (1922) [10]

The disinformation label now functions as a rhetorical weapon—designed not to diagnose deception but to isolate deviation. Its power lies in its pre-emptive delegitimisation.

EU DisinfoLab’s 2023 report [4] flagged dissenting COVID-19 and Ukraine commentary as Kremlin-aligned. Analysts inferred attribution through associations, tone, or thematic alignment, without directly proving it. This approach mimics Chinese media law, which defines subversion based on attitude rather than action.

NATO StratCom doctrine intensifies this trend. Where it was once concerned with resilience, “cognitive security” is now framed as a battlespace, in which truth is a protective asset and dissent becomes a risk [4].

Philosophical Interlude: Post-Truth as Governance

Post-truth is not a crisis—it is a method of rule.

“In a world that is becoming too complex to understand, the control of representation is the final domain of sovereignty.” — Jean Baudrillard, Simulacra and Simulation (1981) [11]

Baudrillard warned that truth under modernity becomes a simulation—a representation that masks and replaces the real. In this light, Western fact-checking does not restore the real; it ratifies the authorised.

Platform warnings like “false” or “missing context” do not correct. They realign. Foucault's “regimes of truth”, which circulate within systems of power, are now enforced by algorithmic governance [3].

Unlike authoritarian states, Western systems maintain plausible deniability through delegation: control is distributed, not declared. But the effects are the same: compliance through perception management.

Manufactured Visibility and Algorithmic Legibility

Visibility is not a right—it is a licensed privilege, revoked by deviation.

“There is no standard Google anymore… your monitor is a kind of one-way mirror.” — Eli Pariser, The Filter Bubble (2011) [8]

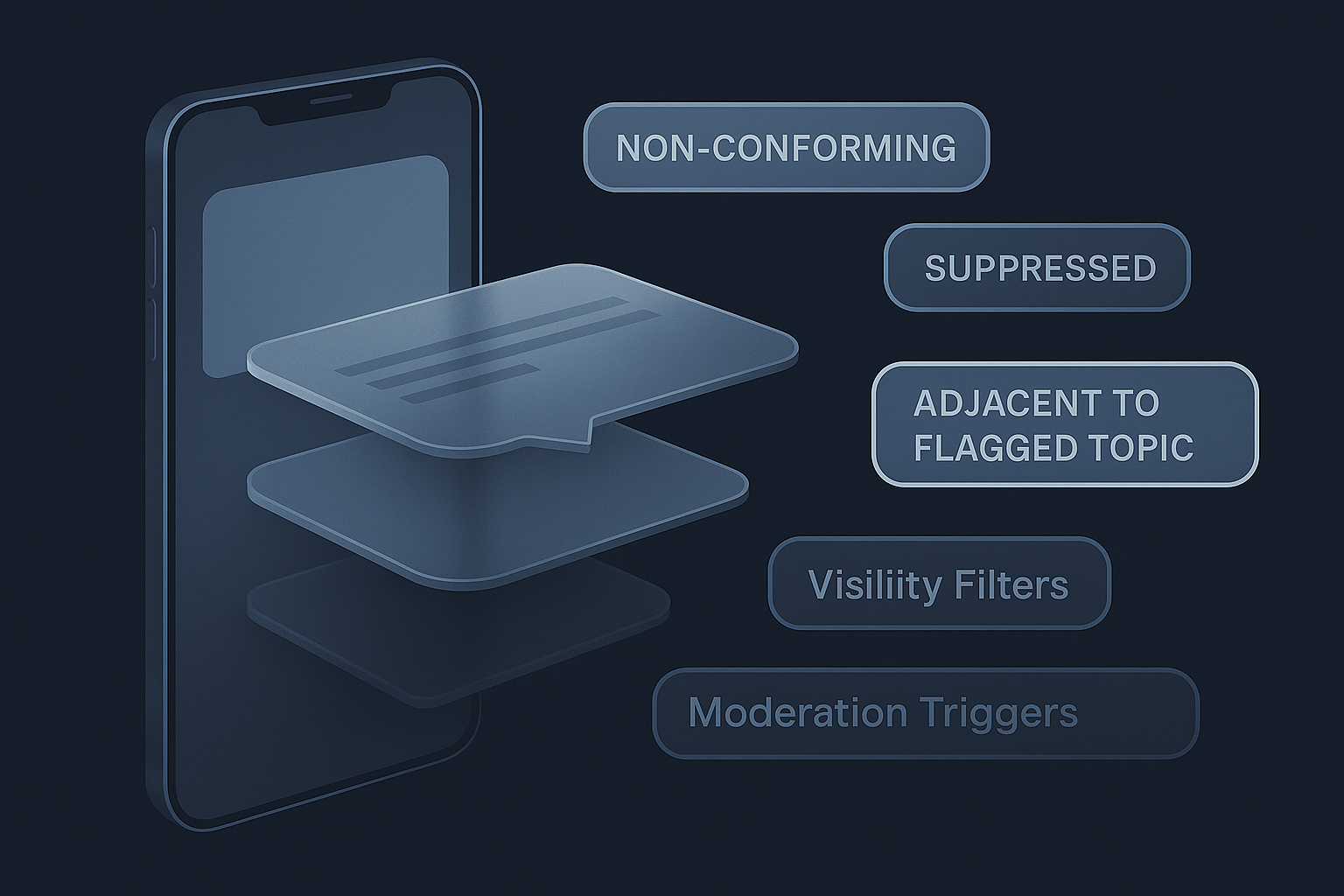

The filter bubble was never about personalisation. It was about partitioning the public. Who sees what—and in what frame—is now dictated by risk compliance matrices [8].

Marcellino’s studies confirm that detection tools actively penalise framing anomalies. They trap benign actors in suppression loops, not for spreading falsehoods but for aligning too closely with flagged themes [7].

This system weaponises attention through controlled amplification, mirroring China’s “public opinion guidance systems.” In the West, demotion and silence replace removal: less visible, more deniable, equally effective [3].

Conclusion: Sovereignty and Narrative Lock-In

In the information age, sovereignty is narrative control enforced through epistemic infrastructure.

Western disinformation frameworks were born from defensive logic. But over time, they have morphed into a civic-military epistemic regime—where state-adjacent actors, platforms, NGOs, and intelligence-linked outlets form a distributed but coherent system [2][3][4].

This system does not imprison—it omits. It does not silence—it redirects. Legitimacy becomes a function of narrative alignment, not factuality. Divergence becomes disinformation by default.

The irony is structural. In seeking to defend democracy from information war, Western institutions have adopted the same cognitive tools as their adversaries. They have simply outsourced the machinery and rebranded the method.

Call to Action

If this briefing reshaped your understanding of information governance, help us go further.

🔍 Subscribe to receive our forensic investigations into narrative warfare and institutional epistemology.

📣 Share this report to expand public awareness of structural censorship.

💠 Become a patron to fund sovereign civilian intelligence: patreon.com/frontlineeuropa

References

- [1] RAND (2021). Combating Foreign Disinformation on Social Media.

- [2] Pamment, J. (2021). Fact-Checking and Debunking: A Comparative Study.

- [3] Marcellino, W., et al. (2020). Human–Machine Detection of Online-Based Malign Information.

- [4] EU DisinfoLab (2023). Kremlin Narrative Strategy: Pre/Post Invasion.

- [5] Lim, M. (2020). Disinformation as a Global Problem.

- [6] Bernays, E. (1928). Propaganda.

- [7] Marcellino, W., et al. (2020). Counter-Radicalization Bot Research.

- [8] Pariser, E. (2011). The Filter Bubble.

- [9] Herman, E. & Chomsky, N. (1988). Manufacturing Consent.

- [10] Lippmann, W. (1922). Public Opinion.

- [11] Baudrillard, J. (1981). Simulacra and Simulation.

- [12] Willemo, J. (2019). Trends in Malicious Use of Social Media.