Signal Intercept

Originally designed to counter misinformation, fact-checking has evolved into a core mechanism for narrative enforcement. No longer grounded in journalistic neutrality, Weaponised Fact-Checking now operates as a transnational tool of epistemic control. Platforms, aligned institutions, and strategic communication agencies increasingly rely on it to shape perceptions and regulate discourse. This article draws on foundational work by RAND, Pamment, Bernays, Lippmann, and others to trace how fact-checking has been transformed from a verification process into censorship infrastructure.

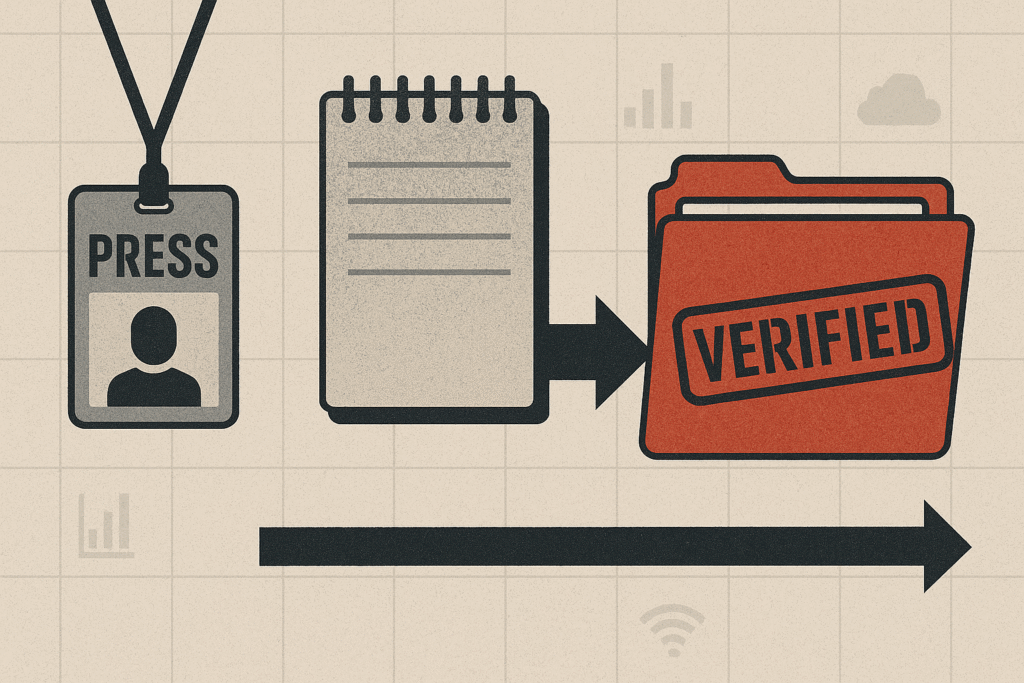

1. The Shift from Verification to Enforcement

Initially, fact-checking emerged as a method for correcting media inaccuracies and exposing political deception. However, Pamment highlights its evolving role:

“Verification has become a proactive capability in strategic communication, rather than a passive check on error” [1].

This shift aligns closely with Edward Bernays’ concept of influence:

“The conscious and intelligent manipulation of the organised habits and opinions of the masses is an important element in democratic society” [2].

Today, fact-checkers often function as early enforcers of institutional consensus.

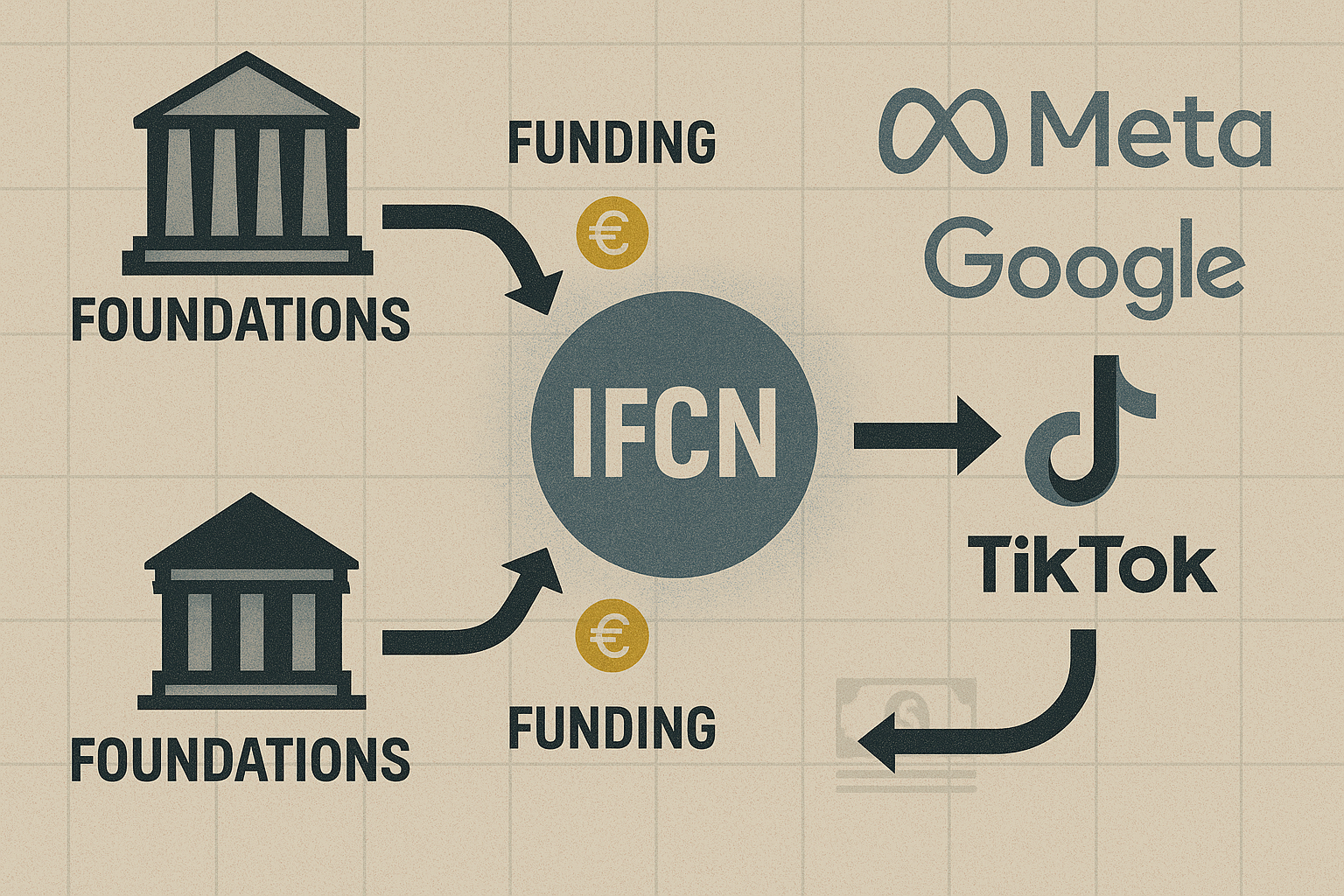

2. IFCN, Funding Loops, and Strategic Alignment

The so-called International Fact-Checking Network (IFCN) promotes standards of ethical independence and transparency. Nonetheless, its funding connects directly to major platforms, philanthropic foundations, and policy-oriented NGOs. RAND underscores this embedded dynamic:

“Fact-checking practices often reflect the institutional frameworks and risk calculations of the entities funding or hosting them” [3].

Walter Lippmann foresaw this environment a century ago:

“The real environment is altogether too big, too complex, and too fleeting for direct acquaintance. … [W]e have to reconstruct it on a simpler model before we can manage with it” [4].

Fact-checking now builds this model on behalf of its institutional patrons.

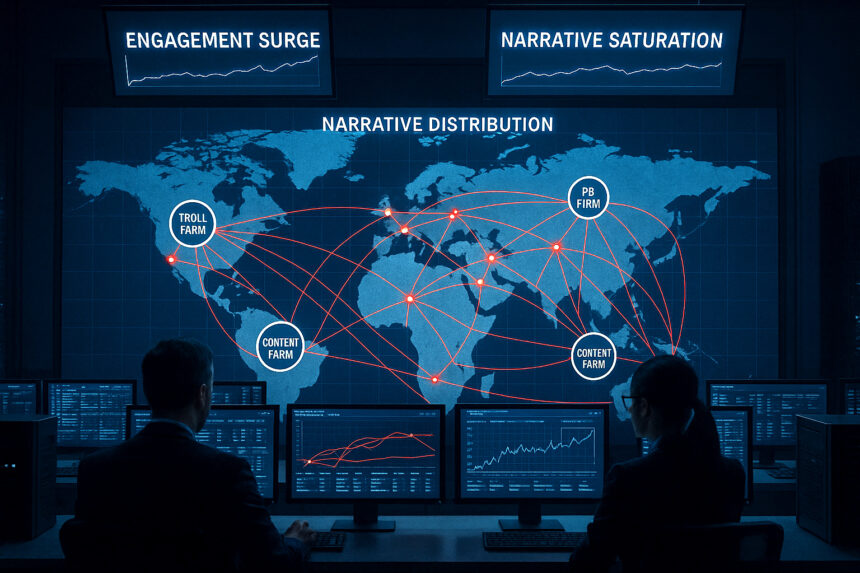

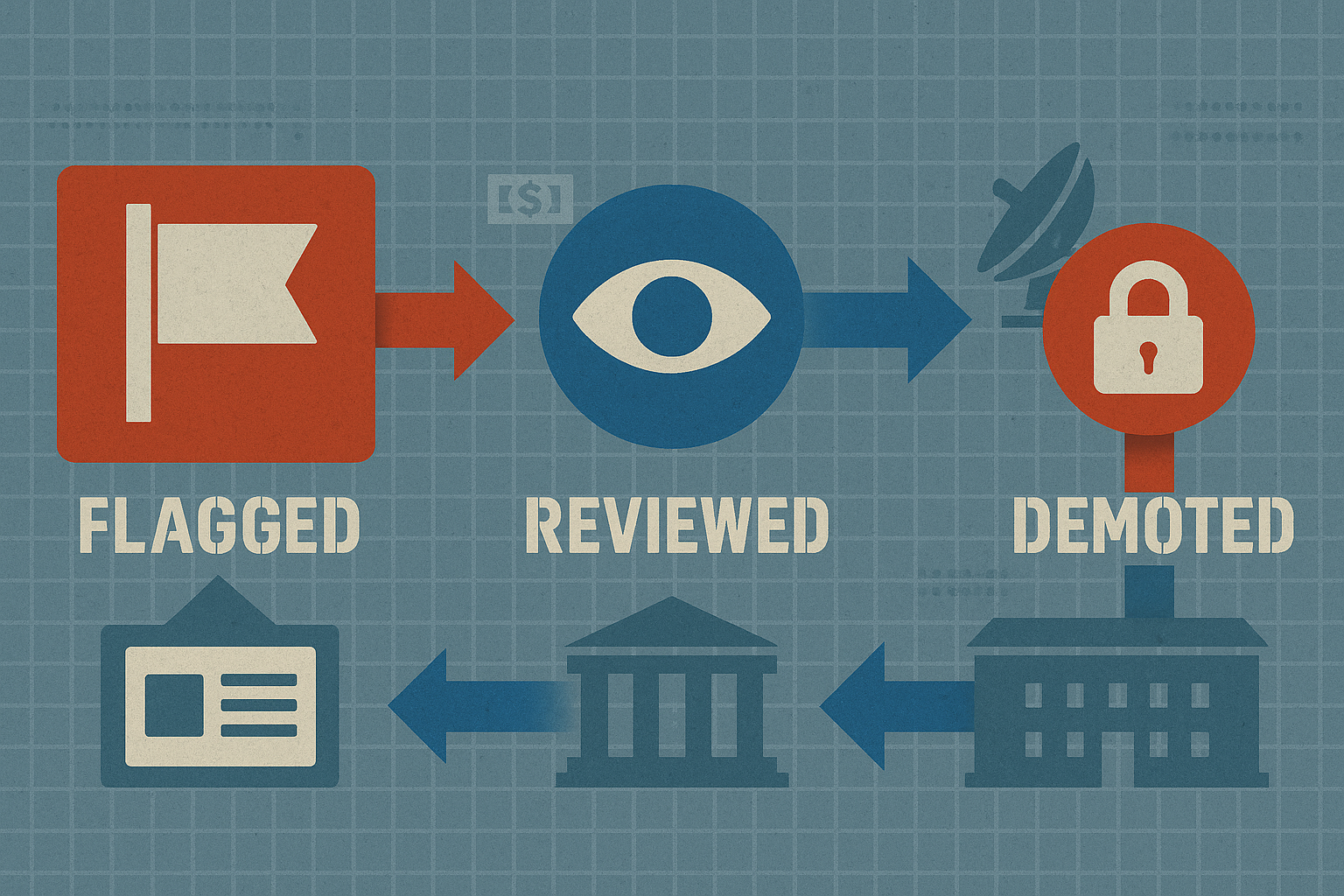

3. Platform Feedback Loops and Algorithmic Governance

Once flagged by fact-checkers, content triggers automatic demotion, demonetisation, or visibility suppression. Although these decisions appear technical, their logic is often strategic. RAND clarifies:

“The disinformation label is sometimes applied to content that is controversial or politically sensitive, rather than demonstrably false” [3].

Importantly, Marcellino’s research on hybrid detection models supports this claim.

“Classifiers struggle to distinguish between false narratives and oppositional framings that conflict with dominant policy views” [5].

In effect, platforms moderate for alignment rather than accuracy.

4. Strategic Consensus and Narrative Containment

In high-stakes geopolitical contexts, such as Ukraine, narrative framing becomes tightly policed. Statements about NATO, corruption, or historical legitimacy are often subject to immediate moderation, even when factually accurate. As Pamment explains:

“In environments of strategic uncertainty, counter-disinformation often merges with reputational management and influence operations” [1].

This convergence is not accidental. NATO’s Strategic Communications Centre of Excellence (StratCom COE) notes:

“Narrative dominance is a prerequisite for operational freedom in the information environment” [6].

Therefore, fact-checking serves as a tool for narrative containment, not merely correction.

5. The Pseudo-Environment and Engineered Legibility

Lippmann’s analysis remains prescient.

“What each man does is based not on direct and certain knowledge, but on pictures made by himself or given to him” [4].

Today, fact-checkers generate those pictures, curating visibility and shaping epistemic boundaries.

Bernays further observed:

“We are governed, our minds are moulded, our tastes formed, our ideas suggested, largely by men we have never heard of” [2].

Lim’s international review complements this insight:

“The boundaries of disinformation are increasingly defined by national security logics rather than epistemological certainty” [7].

Weaponised Fact-Checking as Governance Infrastructure

Fact-checking no longer operates on the margins of the information space. Instead, it anchors the governance infrastructure for digital legitimacy. Bernays articulated this plainly:

“Propaganda is the executive arm of the invisible government” [2].

Today’s fact-checking regime functions as a mechanism of narrative exclusion. It no longer seeks epistemic clarity; instead, it enforces institutional orthodoxy by disqualifying dissent in advance. The verifier’s role has consequently shifted from error corrector to boundary enforcer.

Call to Action

Frontline Europa investigates the systems behind narrative control and Weaponised Fact-Checking. Support our forensic mission by subscribing to receive field-tested analysis, doctrinal exposure, and strategic insight. Our work is grounded in primary sources, not institutional talking points.

Alternatively, support our work by becoming a patron on our Patreon page for exclusive membership benefits and other perks.

References

[1] Pamment, J. (2022). A Capability Framework for Countering Disinformation.
[2] Bernays, E. (1928). Propaganda.
[3] Paul, C., et al. (2022). Russian Disinformation on Social Media. RAND Corporation.
[4] Lippmann, W. (1922). Public Opinion.
[5] Marcellino, W. (2020). Human–Machine Detection of Online-Based Malign Information.
[6] NATO StratCom COE. (2019). Responding to Cognitive Security Challenges.
[7] Lim, M. (2020). Disinformation as a Global Problem.